Why Clusterdyne?

Our mission is to serve biosciences researchers by simplifying access to the vast compute resources of the cloud.

We offer products that make it easy to run CPU- and GPU-intensive workloads, backed by operational excellence and over a decade of cloud expertise. We offer services to build software pipelines and machine learning workflows on those products. We also manage your AWS infrastructure and take on other custom cloud engineering work.

Capabilities

Cloud Migration and Builds

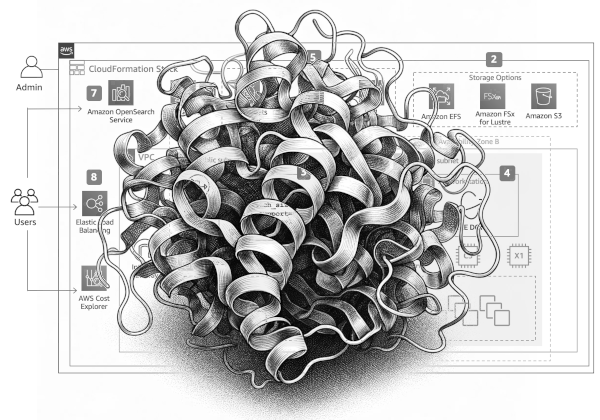

We have successfully migrated complex on-premises systems to AWS, including VPN, VPC, and routing, and scaled them out on On-Demand and Spot EC2 instances. For a separate client, we implemented Infrastructure as Code using CloudFormation templates for multi-stack deployments, with Puppet for configuration.

Containerized, Serverless, and Auto-Scaling EC2 Applications

We have worked on client solutions running in production with the three predominant AWS application architectures: I] Elastic Load Balancer-backed EC2 microservices. II] Containerized microservices orchestrated on ECS/Fargate/EKS. We have also worked with a multi-tenant corporate PaaS (Platform as a Service) with a continuously updated reference architecture. III] Serverless applications using AWS SAM and the Serverless Framework.

Data Engineering

We have ported and parallelized proteomics data pipelines to the BioCluster and BioWorkflow workflow platforms. We have built high-performance data pipelines using the PyData ecosystem (pandas, NumPy) for an energy trading desk. We have also built scaled-out simulation infrastructure on EC2 for backtesting quantitative trading strategies on wire-captured L2 futures data for a Chicago-based market-making firm.

DevOps, Automation, and Continuous Integration/Deployment

We use AWS CodePipeline/CodeBuild/CodeDeploy or GitHub Actions with custom AWS runners to minimize the time between a code commit and the moment it is tested and available in production. We automate tasks to reduce human error in critical systems, and implement monitoring and alerting for post-release operations.

Team

Ran Dugal

Ran's work experience spans two decades building infrastructure for trading systems, cloud solution architectures, data pipelines, and DevOps automation. He built solutions for deploying quantitative models at scale at market participants that traded a material percentage of daily volumes, where the key operational metrics are uptime and latency and the cost of errors can be enormous. He also built key pieces of a high-throughput network security gateway at IMLogic (acquired by Symantec). He completed his first AWS Solutions Architect certifications in 2014, and regularly re-certifies to stay current.